Has it really been four months since ChatGPT smashed its way into the digital landscape, sending the tech world (well, let’s be honest, the whole world) into a frenzy?

‘It’s nothing new!’ piped up various AI boffins, including Meta’s own chief AI scientist Yann LeCun (can’t possibly imagine why Meta are feeling bitter), but the truth is – what ChatGPT achieved and continues to achieve *is* new. The likes of Google, Microsoft and Meta may well have had the ability and resources to launch a similar product way before OpenAI – but they didn’t. The goliaths were caught napping by a moderately small team of AI researchers and engineers based in San Francisco, and thus, ChatGPT was born.

While most of us were still catching our breath from GPT-3 (it writes code! It creates poems! It can hold a conversation! etc…), OpenAI upped its game once again with the launch of GPT-4.

What is GPT-4?

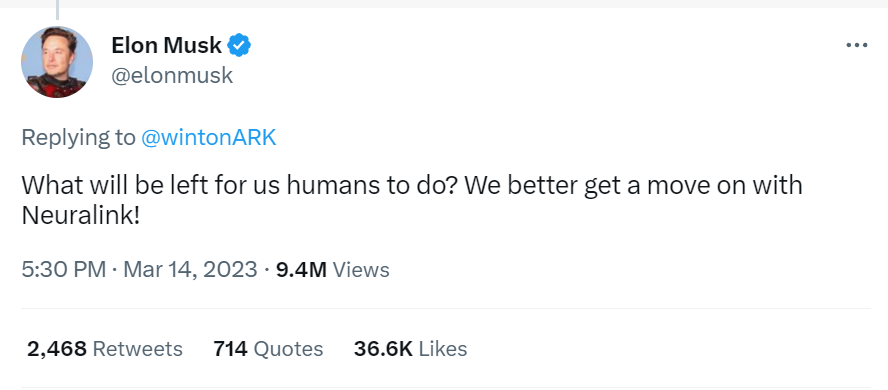

Released on 14th March, GPT-4 is OpenAI’s newest wunderkind and the most extraordinary AI model to date from the masterminds behind ChatGPT and DALL-E. This system is capable of passing the US bar exam, deciphering perplexing logic riddles and ingeniously serving up simple, tailor-made recipes after analysing a picture of the contents of your fridge or food cupboard. Its potential is so huge, it’s even got Elon Musk worried.

How does it differ from GPT-3?

In terms of general Q&A-style conversations, the distinction between GPT-3 and GPT-4 can be pretty subtle. Oh, and its poetry skills still leave a lot to be desired. But those things aside, GPT-4’s skills vastly surpass those of its older sibling. Here’s a taster of what it can do:

Processes eight times as many words as its predecessor

GPT-4 can process up to around 25,000 words in one go, an eightfold improvement over GPT-3’s capabilities. This means it can handle much larger documents, making it more useful in a range of work settings. With its ability to manage substantially more text, GPT-4 can support a broader range of applications, including long-form content creation, document search and analysis, and extended conversations.
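For anyone wondering where the 25,000-word and eightfold figures come from, here is a rough back-of-the-envelope sketch. It relies on numbers that aren’t in this article and should be treated as assumptions: a 32,768-token context window for the larger GPT-4 model, a 4,096-token window for GPT-3.5 (the model behind ChatGPT, which seems to be the comparison being made), and OpenAI’s rule of thumb that one token is roughly three-quarters of an English word.

```python
# Rough arithmetic behind the "25,000 words" and "8x" figures.
# Assumptions (not from the article): the larger GPT-4 model has a
# 32,768-token context window, GPT-3.5 has a 4,096-token window, and
# one token is roughly 0.75 English words (OpenAI's rule of thumb).
WORDS_PER_TOKEN = 0.75

def approx_words(tokens: int) -> int:
    """Convert a token budget into an approximate English word count."""
    return round(tokens * WORDS_PER_TOKEN)

gpt4_tokens = 32_768   # assumed GPT-4 (32K variant) context window
gpt35_tokens = 4_096   # assumed GPT-3.5 context window

print(approx_words(gpt4_tokens))    # 24576, i.e. roughly 25,000 words
print(approx_words(gpt35_tokens))   # 3072, i.e. roughly 3,000 words
print(gpt4_tokens // gpt35_tokens)  # 8, the "eightfold" improvement
```

Real word counts will vary with the text itself, since how many tokens a passage uses depends on its vocabulary and language.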

Exhibits greater reliability

ChatGPT, Google’s Bard prototype and Bing Chat have all faced criticism for occasionally producing unexpected, incorrect or even disturbing outputs. However, OpenAI spent six months refining its new model, drawing on lessons from its “adversarial testing program” and from ChatGPT. According to OpenAI, the result is its best performance to date in terms of factuality, controllability and staying within established boundaries.

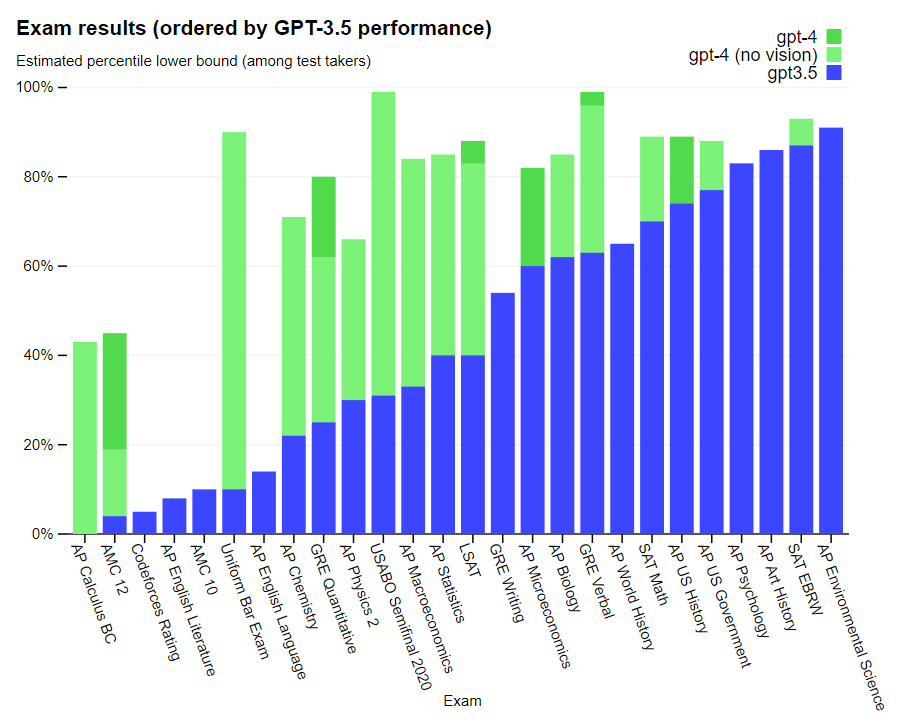

It’s way smarter than GPT-3.5

It’s also most likely way smarter than you. According to OpenAI, GPT-4 scored around the top 10% of test-takers on some of the toughest exams in the US, landing in the 90th percentile on the Uniform Bar Exam and the 93rd percentile on the SAT reading and writing test.

That said, it’s not too hot on English Literature, so perhaps anyone relying on GPT-4 to write an insightful essay exploring how Jane Austen used parody and irony to critique the portrayal of women in late 18th-century society may be greeted with a D- and a letter home to Mum.

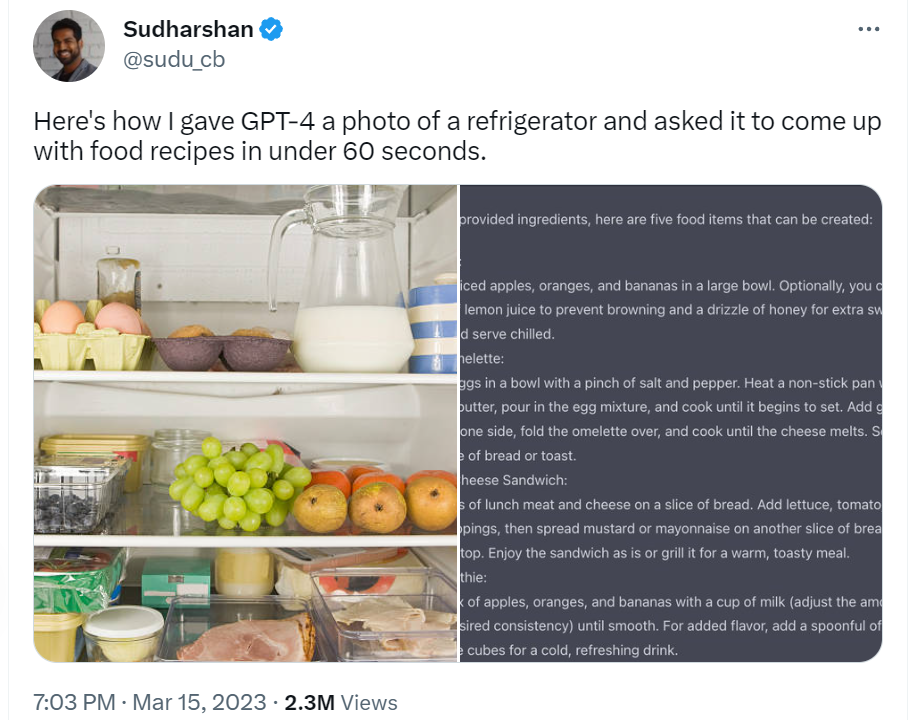

Reads and analyses images

Possibly GPT-4’s most notable enhancement is its ‘multimodal’ capability, enabling it to work with both text and images. While it can’t generate images like DALL-E, it can process and respond to visual inputs. This development in particular has been causing quite a storm over on Twitter, the now-viral refrigerator tweet being one prime example.

Those scrambling to try out this skill in the hope of finding delicious recipe inspiration for a fridge containing two eggs, a can of Fanta and a half-eaten peperami are going to be disappointed... at least in the short term. The multimodal version has yet to be rolled out to GPT-4 subscribers and, at present, OpenAI are only collaborating with one partner to prepare the image input capability for eventual wider availability.

That single partner is Be My Eyes, a free app for people who are blind or have low vision, which is using GPT-4 to power its new Virtual Volunteer feature. The feature will be integrated into the existing app and include a dynamic new image-to-text generator, giving users real-time identification, interpretation and conversational visual assistance for a wide variety of tasks, such as map-reading, translating foreign ingredients on a food label, identifying different items in shops or describing clothes in a catalogue.

Transforms drawings into functioning websites

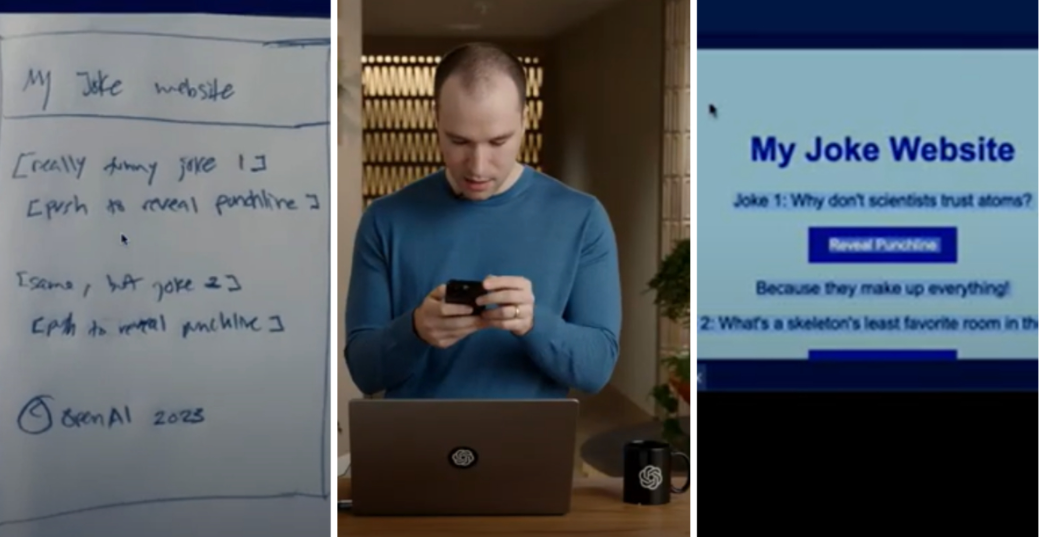

In a recent demo video, Greg Brockman, president and co-founder of OpenAI, showcased GPT-4’s ability to transform a simple hand-drawn sketch into a fully operational website.

Brockman shared a photo of some scribbles labelled ‘My Joke Website’, with placeholders for a couple of jokes. He then asked GPT-4 to write brief HTML/JS code to turn this mock-up into a colourful website, with the placeholder jokes replaced by two real ones.

GPT-4 promptly generated working HTML and JavaScript, which was then used to bring the website to life.

So what are the downsides?

Impressed? You should be. But while the jazz hands are warranted, there is – as you would expect – the odd fly in the AI ointment.

Responses can still be iffy

Despite OpenAI’s significant investment in improving its results, GPT-4 is still prone to ‘hallucinating’, i.e. giving incorrect or nonsensical answers to questions. Like its predecessor, GPT-4 has no access to external sources of truth or verification, and relies only on its internal knowledge and the context it is given. Greg Brockman was keen to acknowledge these shortcomings in a recent interview with TechCrunch:

“There’s still a lot of problems and mistakes that [the model] makes … but you can really see the jump in skill in things like calculus or law, where it went from being really bad at certain domains to actually quite good relative to humans.”

Greg Brockman, OpenAI

It’s not free

While ChatGPT is free of charge, GPT-4 is only available to ChatGPT users through the $20-a-month ChatGPT Plus subscription. With the bells-and-whistles multimodal version still not widely available, the differences between the paid and free versions are not abundantly obvious, at least for occasional users who are mainly interested in its Q&A capabilities. That could leave new subscribers wondering whether the $20 fee is worth it right now. There’s also currently a cap on the number of messages that can be sent in a three-hour period, and an even lower cap is expected as demand grows.

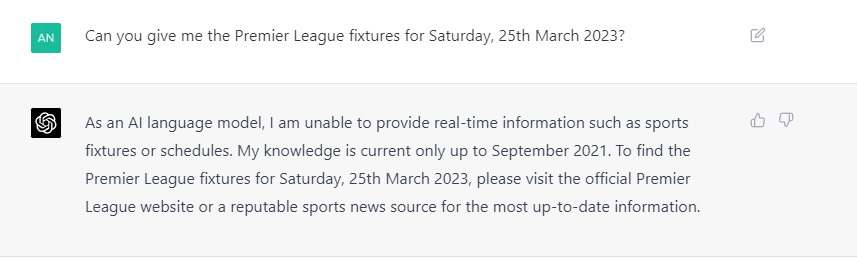

It can only provide info up to September 2021

As with its predecessor, GPT-4’s knowledge only extends to September 2021, putting it way behind the likes of Bing Chat, which searches the web to offer bang-up-to-date information (the irony being that Bing Chat is actually powered by GPT-4). This makes the model pretty redundant for questions about anything that has happened since.

Final thoughts

Not being able to use the multimodal function right now is frustrating to say the least, as this is where I believe GPT-4 will really be a game changer for so many users. Hopefully it won’t be long before this article can be updated with my own fridge recipes and a cornucopia of other image-related experiments. That said, I’m prepared to be patient. If the last few months have taught us anything, it’s that we’re entering a glorious and golden era of AI innovation. I sense this might just be the beginning of a rather extraordinary journey…and I’m happy to come along for the ride.